Dr Rick Skarbez, a computer scientist from La Trobe University, recently co-wrote an opinion piece entitled “It Is Time to Let Go of ‘Virtual Reality’”, published in Communications of the ACM. It’s a timely provocation that argues that virtual reality (VR) and augmented reality (AR) are subsets of mixed reality (MR). I realise this is a lot of acronyms, but it is an argument that deserves unpacking, especially in light of advances in VR hardware that allow virtual objects (holograms, 3D models) to be placed and interacted with in the real-life environment of the person using the equipment.

Of course, there are already MR headsets, such as HoloLens, Magic Leap and the forthcoming Apple Vision Pro, that fit within the mixed reality category even though they are sometimes referred to as AR. All this is very confusing because ideas about MR have morphed over time. For example, I’ve read papers that describe MR experiments that add real-life objects (tangibles) to virtual reality environments. An example might be a VR system that associates a real object with a 3D model: an ordinary box that a user picks up is paired with a 3D model of a house, which the user can then interact with in a fully realised (synthetic) VR environment.

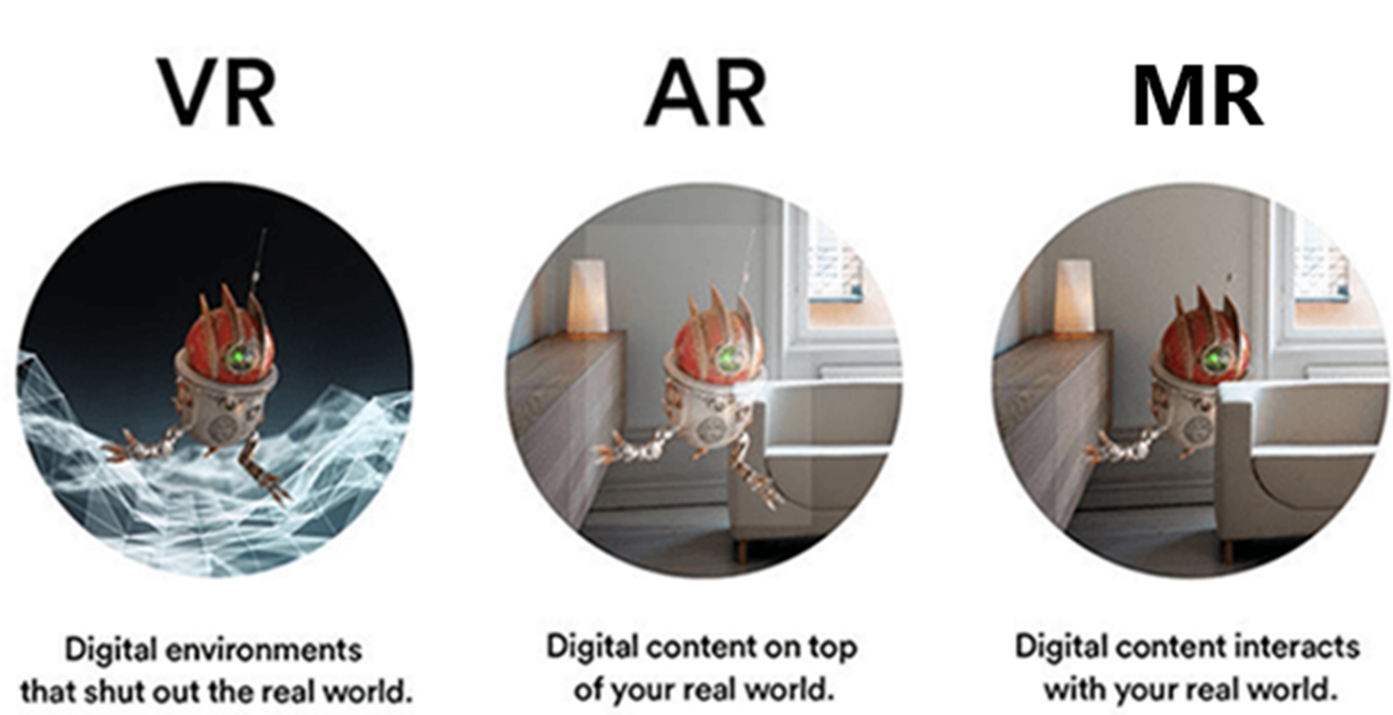

There have also been other ways to explain the difference between AR, VR and MR, like this infographic:

The above graphic is adapted from https://www.mobileappdaily.com/2018/09/13/difference-between-ar-mr-and-vr

Or this more detailed explanation:

Some headsets now have passthrough cameras which record and track the user’s environment in real time and, like a live stream, render it in front of the user’s eyes in the headset. This means that virtual 3D objects can be seemingly overlaid on the user’s real environment, and users can increasingly interact with them through hand gestures and voice controls, although hand-held controllers are still commonly used. This might allow you to put a virtual alpaca in what looks like your lounge room and, depending on how sophisticated the programming of the virtual beastie is, interact with it in a playful manner. This is mixed reality because it blends the real (your lounge room) with the virtual (the synthetic alpaca), which is programmed to respond to you and your space. Another example is the use of MR headsets such as HoloLens to teleoperate robots with varying levels of autonomy through gestural control. The mixed reality headset manufacturer Magic Leap offers this useful overview of the difference between AR, VR and MR in terms of user experience:

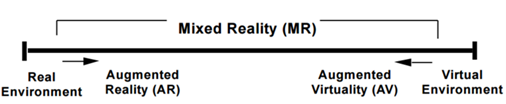

Traditionally, many researchers have used the reality-virtuality continuum from Milgram and Kishino’s (1994) A Taxonomy of Mixed Reality Visual Displays (diagram below).

Some university and industry commentators have introduced eXtended Reality (XR) as an umbrella term for AR, VR and MR. The term “immersive” is also used to encompass these technologies, as in “immersive education”. Others, such as Apple, have gone back to the technical language of spatial computing.

For the non-technical person, it continues to be a confusing terminology mess. The melding of AR/MR capability (via passthrough cameras) into what are conventionally thought of as VR headsets has prompted a rethink.

To return to Skarbez et al. (2023): they argue that the term mixed reality should be used as an “organizing and unifying concept… (to) harmonize discordant voices” in the ongoing “terminology wars” of the field (p. 41). They have previously written an article (Skarbez et al. 2021) that reworks Milgram and Kishino’s (1994) reality-virtuality continuum. In these writings, they suggest that all technology-mediated realities are mixed reality because it is now common to have environments “in which real-world and virtual-world objects and stimuli are presented together… as a user simultaneously perceive[s] both real and virtual content” (Skarbez et al. 2023, p. 42). Their articles present both the potential positive and negative outcomes of moving to a mixed reality umbrella, and there is certainly a need to consider what may be lost without the precision of distinguishing between types of technologies.

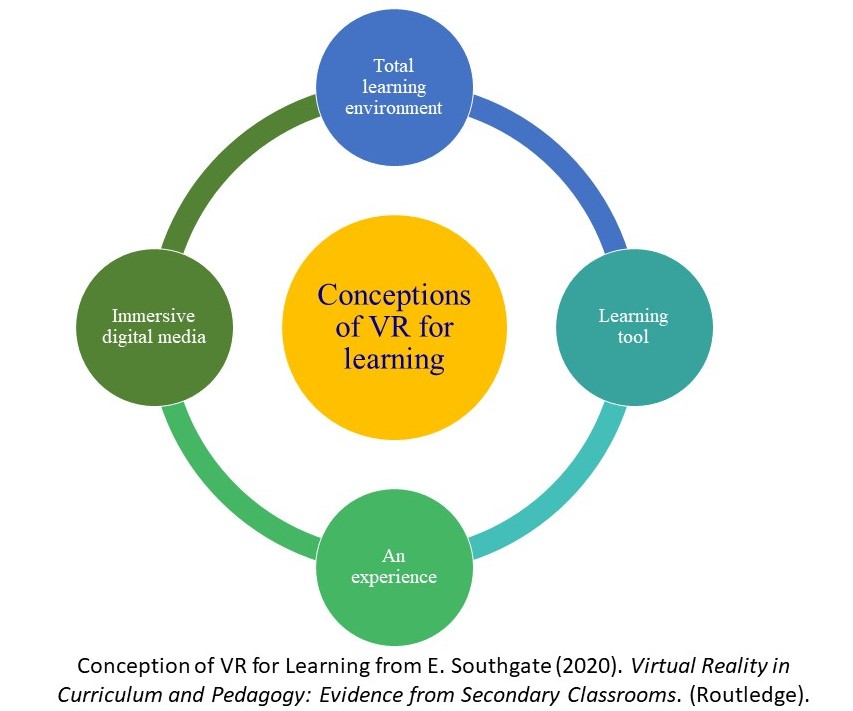

From an educational perspective, AR, VR and MR have some similar but also quite different learning affordances (properties that can enable educational experiences). Likewise, there are common and unique ethical considerations now that AI is being integrated into immersive technologies.

I will be having a videoed conversation with Rick Skarbez about his ideas on the future of immersive technologies and their terminology in January 2024 and posting it to the VR School website, so stay tuned.

This post was brought to you by A/Prof Erica Southgate, an alphabet-soup kind of person who simultaneously uses the terms VR, MR, XR, metaverse and immersive technologies.

References

Milgram, P., & Kishino, F. (1994). A taxonomy of mixed reality visual displays. IEICE Transactions on Information and Systems, 77(12), 1321-1329.

Skarbez, R., Smith, M., & Whitton, M. C. (2021). Revisiting Milgram and Kishino’s reality-virtuality continuum. Frontiers in Virtual Reality, 2, 647997.

Skarbez, R., Smith, M., & Whitton, M. C. (2023). It Is Time to Let Go of ‘Virtual Reality’. Communications of the ACM, 66(10), 41-43.

Cover image from Art with Mrs Filmore, 1st Grade: “Mixed media alphabet soup!” https://www.artwithmrsfilmore.com/tag/alphabet-soup-art-lesson/